Making artificial intelligence practical, productive, and accessible to everyone. Practical AI is a show in which technology professionals, business people, students, enthusiasts, and expert guests engage in lively discussions about Artificial Intelligence and related topics (Machine Learning, Deep Learning, Neural Networks, GANs, MLOps, AIOps, and more). The focus is on productive implementations and real-world scenarios that are accessible to everyone. If you want to keep up with the latest advances in AI, while keeping one foot in the real world, then this is the show for you!

What is the model lifecycle like for experimenting with and then deploying generative AI models? Although there are some similarities, this lifecycle differs from earlier data science practice in that models are typically not trained from scratch (or even fine-tuned). Chris and Daniel give a high-level overview of this lifecycle and discuss model optimization and serving.

Changelog++ members save 2 minutes on this episode because they made the ads disappear. Join today!

Sponsors:

Featuring:

Show Notes:

Something missing or broken? PRs welcome!

Timestamps:

(00:07) - Welcome to Practical AI

(00:43) - Daniel at GopherCon & IIC

(03:31) - Local inference & TDX

(08:23) - Cloudflare Workers AI

(09:43) - Implementing new models

(16:14) - Sponsor: Neo4j

(17:11) - Navigating Hugging Face

(20:21) - Model Sizes

(24:34) - Running the model

(30:20) - Model optimization

(34:17) - Cloud vs local

(39:26) - Cloud standardization

(43:00) - Open source go-to tools

(46:21) - Keep trying!

(48:18) - Outro

Dominik Klotz from askui joins Daniel and Chris to discuss UI automation and how AI lets askui automate any use case on any operating system. Along the way, the trio explores various approaches and the integration of generative AI, large language models, and computer vision.

Timestamps:

(00:05) - Welcome to Practical AI

(00:35) - Sponsor: Statsig

(04:12) - askui

(07:49) - What does data look like?

(09:53) - Tying in classification

(11:51) - Range of uses

(15:37) - Many platforms 1 approach

(16:42) - askui's practical approach

(20:22) - Deploying

(22:11) - Daniel's bad idea?

(25:49) - Handling input & output

(27:32) - Other tests

(29:04) - Sponsor: Changelog News

(30:45) - Facing AI challenges

(35:33) - Getting started

(37:37) - Roadmap challenges

(39:32) - Future vision

(41:32) - Conclusion

(42:16) - Outro

In this episode we welcome back our good friend Demetrios from the MLOps Community to discuss fine-tuning vs. retrieval augmented generation. Along the way, we also chat about OpenAI Enterprise, results from the MLOps Community LLM survey, and the orchestration and evaluation of generative AI workloads.

Timestamps:

(00:07) - Welcome to Practical AI

(00:43) - Practical AI & Friends

(02:01) - Look into MLOps community

(04:19) - Changes in the AI community

(07:34) - Finding the norm

(08:30) - Matching models & uses

(11:21) - Stages of debugging

(13:10) - Layer orchestration

(16:26) - Practical hot takes

(21:46) - Fine-tuning is more work

(24:13) - Retrieval augmented generation

(31:23) - MLOps survey

(38:09) - The next survey

(41:50) - Enterprise hypetrain

(42:39) - OpenAI & your data

(43:19) - AI vendor lock-in?

(47:44) - Now what do we do?

(48:58) - Hype in the AI life

(56:35) - Goodbye

(57:23) - Outro

You might have heard a lot about code generation tools using AI, but could LLMs and generative AI make our existing code better? In this episode, we sit down with Mike from TurinTech to hear about practical code optimizations using AI “translation” of slow to fast code. We learn about their process for accomplishing this task along with impressive results when automated code optimization is run on existing open source projects.

Timestamps:

(00:07) - Welcome to Practical AI

(00:43) - Code optimizing with Mike Basios

(03:19) - Solving code

(07:24) - The AI code ecosystem

(10:41) - Other targets

(12:58) - AI rephrasing?

(15:28) - Sponsor: Changelog News

(16:40) - State of current models

(20:31) - Improvements to devs

(22:31) - Managing your AI intern

(25:09) - Custom LLM models

(29:49) - Biggest challenges

(33:19) - Hallucination & optimization

(35:42) - Test chaining?

(39:09) - LLM workflow

(41:25) - Most exciting developments

(43:40) - Looking forward to faster code

(44:14) - Outro

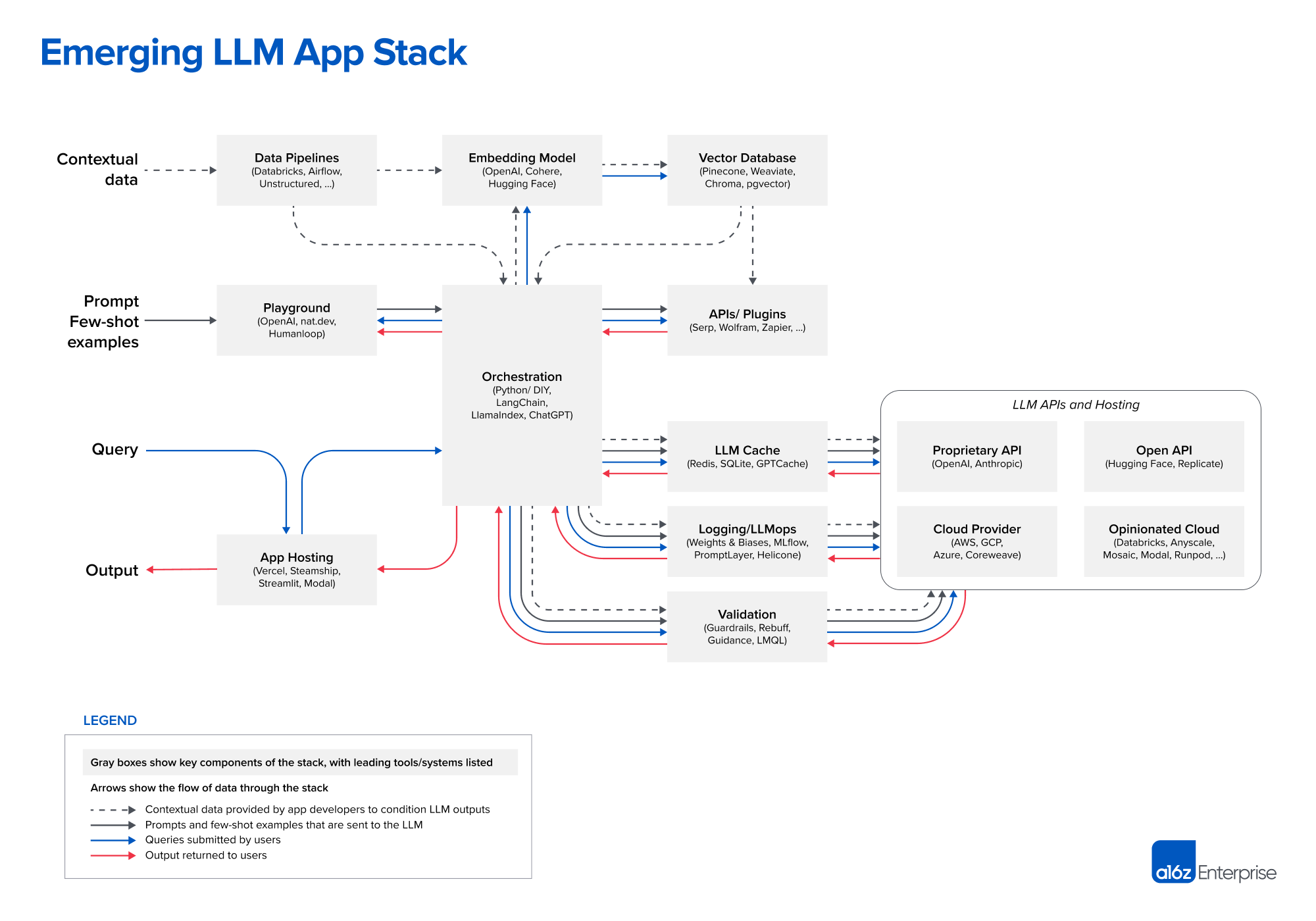

Recently, a16z released a diagram showing the “Emerging Architectures for LLM Applications.” In this episode, we expand on that diagram to build a more general mental model of the new AI app stack. We cover a variety of topics, from model “middleware” for caching and control to app orchestration.

Show Notes:

Emerging Architectures for LLM Applications

Timestamps:

(00:07) - Welcome to Practical AI

(00:43) - Deep dive into LLMs

(02:25) - Emerging LLM app stack

(04:35) - Playgrounds

(08:07) - App Hosting

(10:46) - Stack orchestration

(15:50) - Maintenance breakdown

(19:08) - Sponsor: Changelog News

(20:43) - Vector databases

(22:36) - Embedding models

(24:27) - Benchmarks and measurements

(26:59) - Data & poor architecture

(29:42) - LLM logging

(33:01) - Middleware Caching

(37:32) - Validation

(40:53) - Key takeaways

(42:36) - Closing thoughts

(44:23) - Outro

In this Fully Connected episode, Daniel and Chris kick things off by noting that Stability AI released Stable Diffusion XL (SDXL) 1.0, its latest text-to-image model! They discuss its virtues and then dive into how the United States, the European Union, and other entities are approaching AI governance through new laws and legal frameworks. In particular, they review the White House’s approach, noting the potential for unexpected consequences.

Show Notes:

LEARNING RESOURCE!

Timestamps:

(00:00) - Welcome to Practical AI

(00:43) - We're fully connected

(01:41) - DIY Superconductor

(06:18) - Stable Diffusion XL 1.0

(09:40) - Minimum requirements

(11:19) - Llama 2

(12:24) - Water from a poisoned well

(14:58) - Sponsor: Changelog News

(16:07) - Policy makers

(17:12) - Letter to the EU

(20:55) - Fear on both sides

(23:53) - AI Bill of Rights

(28:52) - Human alternatives

(32:33) - AIRC compliance

(37:08) - Learning resources

(40:19) - Next adventure

(40:53) - Outro

There’s so much talk (and hype) these days about vector databases. We thought it would be timely and practical to have someone on the show who has been hands-on with the various options and has actually tried to build applications leveraging vector search. Prashanth Rao is a real practitioner who has spent a huge amount of time exploring the expanding set of vector database offerings. After introducing vector databases and giving us a mental model of how they fit in with other datastores, Prashanth digs into the trade-offs related to indices, hosting options, embedding vs. query optimization, and more.

Show Notes:

Vector databases blog posts from Prashanth:

Timestamps:

(00:00) - Welcome to Practical AI

(00:43) - Prashanth Rao

(01:40) - What are vector DBs

(03:21) - Semantics and vectors

(04:20) - How vectors are utilized

(09:53) - Evolution of NoSQL

(15:52) - Sponsor: Changelog News

(17:53) - How do we use them

(20:37) - Use cases for vector DBs

(23:58) - Over-optimizing

(26:14) - Tradeoffs

(31:00) - Mature vendors

(33:04) - Speedrunning the blog points

(34:48) - In-memory vs on-disk

(40:43) - Environments

(44:21) - Exciting things in the space

(50:45) - Outro

It was an amazing week in AI news. Among other things, there is a new NeRF and a new Llama in town!!! Zip-NeRF can create some amazing 3D scenes from 2D images, and Llama 2 from Meta promises to change the LLM landscape. Chris and Daniel dive into these and compare some of OpenAI’s recently released functionality to Anthropic’s Claude 2.

Show Notes:

Learning resources:

Timestamps:

(00:00) - Intro

(00:43) - It's a different world

(02:16) - Zip-NeRF

(07:20) - Uses in eCommerce

(10:16) - Industrial use cases

(11:53) - Military ops?

(12:54) - Everyone can benefit

(14:28) - Connect the dots

(15:33) - Llama 2

(18:48) - Parameter limits

(21:48) - What do you need?

(23:59) - Why the jump?

(28:01) - Mark's anti-competitiveness

(31:51) - Walled garden?

(32:34) - Claude 2

(36:59) - Using different models

(39:54) - OpenAI vs Anthropic

(45:52) - Dig in!

(47:02) - Goodbye

(47:17) - Outro

As a technologist, coder, and lawyer, few people are better equipped to discuss the legal and practical consequences of generative AI than Damien Riehl. He demonstrated this a couple of years ago by generating every possible musical melody, writing the melodies to disk, and then releasing them. Damien joins us to answer our many questions about generated content, copyright, dataset licensing and usage, and the future of knowledge work.

Timestamps:

(00:07) - Welcome to Practical AI

(00:43) - Damien Riehl

(01:36) - Regulations on AI

(03:52) - All the music

(07:21) - Human vs AI output

(11:30) - Working alongside AI

(14:51) - Finding the gray area

(20:47) - AI is not copyrightable

(24:19) - Where is the line drawn?

(27:42) - Snake eating its own tail

(30:08) - Can I use these models?

(31:15) - Lessening value of IP

(34:05) - No more patents

(35:55) - 4 Worlds

(38:27) - Scarcity or abundance?

(39:36) - Outrunning the tsunami

(41:26) - Goodbye

(42:05) - Outro

Chris sat down with Varun Mohan, CEO and co-founder of Codeium, and Anshul Ramachandran, Codeium’s Lead of Enterprise and Partnership. They discussed how to streamline and enable modern development with generative AI and large language models (LLMs). Their new tool, Codeium, grew out of insights they gained from their work in GPU software and solutions development, particularly around generative AI, LLMs, and supporting infrastructure. Codeium is a free AI-powered toolkit for developers, built on in-house models and infrastructure rather than being another API wrapper.

Timestamps:

(00:00) - Welcome to Practical AI

(00:43) - Hello Codeium

(01:31) - Varun's background

(03:21) - Anshul's background

(04:59) - Codeium's reason

(07:26) - Increasing GPU demands

(10:10) - Landing on the wave

(11:01) - Riding the wave

(13:45) - Codeium and Copilot

(15:59) - From help to Codeium

(17:35) - What made Codeium stand out?

(20:30) - How Codeium delivers a better UX

(23:54) - Privacy concerns

(27:56) - The practicality of Codeium

(31:13) - What users do

(35:56) - Where generative AI is headed

(40:52) - Goodbye

(41:15) - Outro